Object conversion rule not found. An example of an object conversion rule. Real world problems

Migrating data between different configurations is not a trivial task. As always, there are several solutions, but not all of them are optimal. Let’s try to understand the nuances of data transfer and choose a universal strategy for resolving such issues.

The problem of data migration (we are talking purely about 1C company products) from one solution to another did not arise yesterday. The 1C company understands perfectly well what difficulties developers face when creating migrations, so it tries in every possible way to help with tools.

During the development of the platform, the company introduced a number of universal tools and technologies that simplify data transfer. They are built into all standard solutions, and the problem of migrations between identical configurations has largely been resolved. The close integration of standard solutions confirms this.

With migrations between non-standard solutions, the situation is somewhat more complicated. A wide selection of technologies allows developers to independently choose the optimal way to solve a problem from their point of view.

Let's look at some of them:

- exchange via text files;

- use of exchange plans;

- etc.

Each of them has its own pros and cons, but they share one main disadvantage: verbosity. Implementing migration algorithms yourself costs a lot of time and involves a long debugging process. I don’t even want to talk about further support for such solutions.

The complexity and high cost of support prompted the 1C company to create a universal solution: a technology that simplifies the development and support of migrations as much as possible. The idea was implemented as a separate configuration, “Data Conversion”.

“Data Conversion” is a standard solution and an independent configuration. Any user with an “ITS:Prof” subscription can download this package completely free of charge from the user support site or the ITS disk. Installation is performed in the standard way, like all other standard solutions from 1C.

Now a little about the advantages of the solution. Let's start with the most important thing - versatility. The solution is not tailored to specific platform configurations/versions. It works equally well with both standard and custom configurations. Developers have a universal technology and a standardized approach to creating new migrations. The versatility of the solution allows you to prepare migrations even for platforms other than 1C:Enterprise.

The second big plus is the visual tools. Simple migrations are created without programming. Yes, yes, without a single line of code! For this alone, it’s worth spending the time to learn the technology once and then reuse the skill again and again.

The third advantage I would note is the absence of restrictions on data delivery. The developer chooses how data reaches the receiver configuration. Two options are available out of the box: uploading to an xml file and a direct connection to the infobase (COM/OLE).

Studying architecture

We already know that data conversion can work wonders, but it is not yet entirely clear what the technical advantages are. The first thing you need to understand is that any data migration (conversion) is based on exchange rules. Exchange rules are a regular xml file describing the structure into which data from the infobase will be uploaded. The service processing that uploads and loads data analyzes the exchange rules and performs the upload based on them. During loading, the reverse process takes place.

The “Data Conversion” configuration (“CD” below) is a kind of visual constructor with which the developer creates exchange rules. It cannot upload or load data itself. Additional external service processing files included in the CD distribution package are responsible for this. There are several of them (XX in the file name is the platform version number):
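To make the idea of a rules-driven upload more concrete, here is a minimal sketch in Python (not 1C code). The rule format, the tag names and the `export` function are all invented for illustration; real exchange rules produced by Data Conversion have their own, much richer structure.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily simplified rules: which source property goes where.
RULES_XML = """
<rules>
  <object source="Nomenclature" receiver="Goods">
    <property source="Name" receiver="Title"/>
    <property source="Article" receiver="SKU"/>
  </object>
</rules>
"""


def export(records, rules_xml, source_object):
    """Build an upload file for one object type by following the rules."""
    rules = ET.fromstring(rules_xml)
    # Pick the rule that describes the requested source object.
    rule = next(o for o in rules.iter("object") if o.get("source") == source_object)
    root = ET.Element("data")
    for record in records:
        item = ET.SubElement(root, rule.get("receiver"))
        for prop in rule.iter("property"):
            value = record[prop.get("source")]
            ET.SubElement(item, prop.get("receiver")).text = str(value)
    return ET.tostring(root, encoding="unicode")


upload = export([{"Name": "Bolt M6", "Article": "B-006"}], RULES_XML, "Nomenclature")
```

The point of the sketch is only that the upload code knows nothing about specific objects: everything it writes is dictated by the rules file, so changing the rules changes the output without touching the code.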

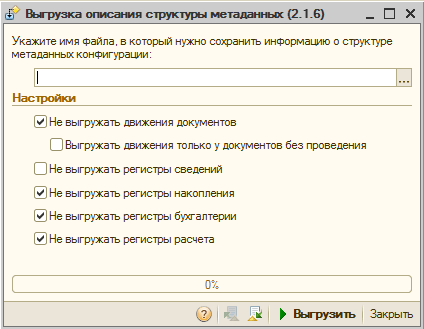

- MDXXExp.epf: this processing uploads a description of the infobase structure to an xml file. The structure description is then loaded into the CD for analysis and creation of exchange rules.

- V8ExchanXX.epf: uploads and loads infobase data in accordance with the exchange rules. In most standard configurations this processing is present out of the box (see the “Service” menu item). The processing is universal and is not tied to any specific configurations or rules.

Okay, now, based on all of the above, let’s define the stages of developing a new conversion:

- Definition of the task. It is necessary to clearly understand what data needs to be transferred (from which configuration objects) and, most importantly, where to transfer it.

- Preparation of descriptions of the configuration structures (Source/Receiver) for subsequent loading into the CD. This is done by the MDXXExp.epf service processing.

- Loading the prepared structure descriptions into the CD infobase.

- Creating exchange rules using a visual CD tool.

- Performing upload/download according to the created data conversion rules using V8ExchanXX.epf processing.

- Debugging exchange rules (if necessary).

The simplest conversion

For the demonstration we will need two deployed configurations. I decided to go with “Trade Management” edition 10 (“UT”) and a small custom solution. The task is to transfer data from the standard “UT” configuration. For brevity, let’s call the custom solution “Receiver” and Trade Management “Source”. Let’s start by transferring elements of the “Nomenclature” directory.

First of all, let's take a look at the data conversion scheme and re-read the list of actions that need to be done. Then we launch the “Source” configuration and open the MD82Exp.epf service processing in it.

The processing interface does not have an abundance of settings. The user only needs to indicate the types of metadata objects that will not be included in the structure description. In most cases these settings do not need to be changed, because there is little point in uploading, for example, movements of accumulation registers.

It is more correct to generate the movements by posting the documents in the receiver: after the transfer, each document will make all of its movements itself. The second argument in defense of the default settings is a smaller upload file.

Some documents (especially in standard configurations) generate movements across multiple registers. Uploading all of this will make the resulting XML file too large, which may complicate its transfer and loading into the receiver base. The larger the data file, the more RAM is required to process it. In my practice, I have encountered indecently large upload files that flatly refused to be parsed by standard tools.

So, we leave all the default settings and upload the configuration description to a file. We repeat a similar procedure for the second base.

Open the CD and select in the main menu “Directories” -> “Configurations”. The directory stores descriptions of the structures of all configurations that can be used to create conversions. We load the configuration description once, and then we can use it multiple times to create different conversions.

In the directory window, click the “Add” button and in the window that appears, select the file describing the configuration. Check the “Load into a new configuration” checkbox and click on the “Load” button. We perform similar actions with the description of the structure of the second configuration.

Now you are ready to create exchange rules. In the main CD menu, select “Directories” -> “Conversions”. Add a new element. In the window for creating a new conversion, you need to specify: the source configuration (select UT) and the destination configuration (select “Receiver”). Next, open the “Advanced” tab and fill in the following fields:

- exchange rules file name - the created exchange rules will be saved under this name. You can change the file name at any time, but it is best to set it now. This will save time in the future. I named the rules for the demo example: “rules-ut-to-priemnik.xml”.

- name - the name of the conversion. The name can be absolutely anything, I limited myself to “Demo. UT to Receiver.”

That’s it, click “Ok”. A window immediately appears offering to create all the rules automatically. Agreeing to such a tempting offer tells the wizard to analyze the descriptions of the selected configurations and generate exchange rules on its own.

Let’s dot the i’s right away: the wizard will not be able to generate anything serious. However, this possibility should not be discounted. If you need to establish an exchange between identical configurations, the wizard's services will be very useful. For our example, manual mode is preferable.

Let’s take a closer look at the “Exchange Rules Settings” window. The interface may seem a little confusing - a large number of tabs crammed with controls. In fact, everything is not so difficult; you begin to get used to this madness after a few hours of working with the application.

At this stage, we are interested in two tabs: “Object conversion rules” and “Data upload rules”. On the first, we configure the matching rules, i.e. match objects of the two configurations. On the second, we define the objects that will be available to the user for uploading.

In the second half of the “Object conversion rules” tab there is an additional panel with two tabs: “Property Conversion” and “Converting values”. The first is used to select the properties (attributes) of the selected object, and the second is needed for working with predefined values (for example, predefined directory elements or enumeration values).

Great, now let's create conversion rules for directories. You can perform this action in two ways: use the Object Synchronization Wizard (the “” button) or add correspondence for each object manually.

To save space, we will use the first option. In the wizard window, uncheck the “Documents” group (we are only interested in directories) and expand the “Directories” group. We carefully scroll through the list and look at the names of the directories that can be matched.

In my case, there are three such directories: Nomenclature, Organizations and Warehouses. There is also a directory called “Clients”, which serves the same purpose as “Counterparties” from the “UT” configuration. True, the wizard could not match them because of their different names.

We can fix this ourselves. In the “Object Matches” window we find the “Clients” directory and select the “Counterparties” directory in the “Source” column. Then check the box in the “Type” column and click the “Ok” button.

The Object Synchronization Wizard will offer to automatically create rules for converting the properties of all selected objects. The properties will be matched by name; for our demonstration this is quite sufficient, so we agree. Next comes an offer to create upload rules. Let's agree to it too.

The basis for the exchange rules is ready. We selected the objects for synchronization, and the property conversion rules and upload rules were created automatically. Let’s save the exchange rules to a file, then open the “Source” infobase (in my case it’s UT) and launch the V8Exchan82.epf service processing in it.

First of all, in the processing window, select the exchange rules we created. We answer the question about loading the rules in the affirmative. The processing will analyze the exchange rules and build a tree of the objects available for uploading. For this tree, we can set up all sorts of filters or exchange nodes whose registered changes determine which data is selected. We want to upload absolutely all the data, so there is no need to set filters.

After the data has been uploaded to a file, go to the “Receiver” infobase. We open the V8Exchan82.epf processing there as well, only this time we go to the “Data Loading” tab. Select the data file and click the “Download” button. That's it, the data has been successfully transferred.

Real world problems

The first demo could be misleading. Everything looks quite simple and logical. Actually this is not true. In real work, problems arise that are difficult or completely impossible to solve using visual means alone (without programming).

In order not to be disappointed with the technology, I prepared several real-life problems. You will definitely come across them at work. They don’t look so trivial and make you look at data conversion from a new angle. Carefully consider the presented examples, and feel free to use them as snippets when solving real problems.

Task No. 1. Fill in the missing details

Suppose we need to transfer the “Counterparties” directory. The receiver has a similar “Clients” directory for this purpose. It is completely suitable for storing the data, but it has an “Organization” attribute, which separates counterparties by the organization they belong to. By default, all counterparties must belong to the current organization (it can be obtained from the constant of the same name).

There are several solutions to the problem. We will consider filling in the “Organization” attribute right in the “Receiver” database, i.e. at the time of data loading. The current organization is stored in a constant, so there are no barriers to obtaining this value. Let’s open the object conversion rule (hereinafter PKO) “Clients” (double click on the object) and in the rule setup wizard go to the “Event Handlers” section. In the list of handlers we find “After loading”.

Let's describe the code for obtaining the current organization and then assigning it to the details. At the time the “After loading” handler is triggered, the object will be fully formed, but not yet written to the database. Nobody forbids us to change it at our discretion:

    // IsFolder is the "This group" attribute: groups have no organization
    If Not Object.IsFolder Then
        Object.Organization = Constants.CurrentOrganization.Get();
    EndIf;

Before filling in the “Organization” attribute, it is necessary to check the value of the “This group” attribute. The “Clients” directory is hierarchical, so the check for a group is required. Any other attribute can be filled in the same way. Be sure to read the help for the other parameters of the “After loading” handler. For example, among them is the “Refusal” (cancel) parameter. If you assign it the value “True”, the object will not be written to the database. This makes it possible to restrict which objects are written at load time.
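The interplay between filling an attribute and cancelling the write can be modelled in a few lines. The following is a language-neutral sketch in Python, not 1C code: the `Client` class, the `after_load` hook and the rule "elements without a name are not written" are assumptions made up for the illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Client:
    name: str
    is_folder: bool = False
    organization: Optional[str] = None


def after_load(obj: Client, current_organization: str) -> bool:
    """Hypothetical 'After loading' hook. Returns True to cancel the write."""
    if obj.is_folder:
        return False  # groups carry no organization; write them unchanged
    if not obj.name.strip():
        return True   # cancel: illustrative rule, skip elements without a name
    obj.organization = current_organization
    return False


def load(objects: List[Client], current_organization: str) -> List[Client]:
    """Run the hook for every formed object and write only the non-cancelled ones."""
    written = []
    for obj in objects:
        if not after_load(obj, current_organization):
            written.append(obj)
    return written


items = [Client("Buyers", is_folder=True), Client("Acme"), Client("  ")]
written = load(items, "Main Org")
```

Here the group is written untouched, “Acme” receives the current organization, and the nameless element is dropped because the hook asked for cancellation.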

Task No. 2. Details for the information register

In the “Counterparties” directory of the UT configuration there are the attributes “Buyer” and “Supplier”. Both are of type “Boolean” and are used to determine the type of counterparty. In the “Receiver” infobase, the “Clients” directory has no similar attributes, but there is an information register “Types of Clients”. It performs a similar function and can store several types for one client. Our task is to transfer the attribute values into separate records of the information register.

Unfortunately, visual means alone cannot cope here either. Let’s start small and create a new PKO for the information register “Types of Clients”. Do not specify anything as the source, and decline the automatic creation of upload rules.

The next step is to create the upload rule. Go to the corresponding tab and click “Add”. In the window for adding an upload rule, fill in:

- Sampling method. Change to “Arbitrary algorithm”;

- Conversion rule. Select the information register “Types of Clients”;

- Code (name) of the rule. Write it down as “Uploading Client Types”.

Now you need to write code that selects the data for uploading. The “DataSelection” parameter is available for this: we can place a collection with a prepared data set in it. “DataSelection” can take various values: a query result, a selection, a collection of values, etc. We initialize it as a table of values with two columns: client and client type.

Below is the code of the “Before processing” event handler. It initializes the “DataSelection” parameter and then fills it with data from the “Counterparties” directory. Pay attention to how the “ClientType” column is filled. In “UT” our attributes are of the “Boolean” type, while in the receiver it is an enumeration.

At this stage, we cannot convert them to the required type (it is not in the UT), so for now we will leave them in the form of strings. You don’t have to do this, but I immediately want to show how to cast to a missing type in the source.

    DataSelection = New ValueTable();
    DataSelection.Columns.Add("Client");
    DataSelection.Columns.Add("ClientType");

    CatalogSelection = Catalogs.Counterparties.Select();
    While CatalogSelection.Next() Do

        // Groups are not clients, skip them
        If CatalogSelection.IsFolder Then
            Continue;
        EndIf;

        // One row per flag that is set; the type stays a string for now
        If CatalogSelection.Buyer Then
            NewRow = DataSelection.Add();
            NewRow.Client = CatalogSelection.Ref;
            NewRow.ClientType = "Customer";
        EndIf;

        If CatalogSelection.Supplier Then
            NewRow = DataSelection.Add();
            NewRow.Client = CatalogSelection.Ref;
            NewRow.ClientType = "Supplier";
        EndIf;

    EndDo;

Let’s save the data upload rule and return to the “Object conversion rules” tab. Let's add for the information register “

To save space, I will not provide the query code (you can always refer to the sources); there is nothing unusual in it. We iterate over the resulting selection and place the results in the already familiar “DataSelection” parameter. It is again convenient to use a table of values as the collection:

    DataSelection = New ValueTable();
    // This column will hold another tabular section
    DataSelection.Columns.Add("Products");
    // This one will hold a tabular section too
    DataSelection.Columns.Add("Services");
    DataSelection.Columns.Add("Link");

Task No. 4. Transferring data to an operation

If an organization uses several accounting systems, then sooner or later there will be a need to migrate data with the subsequent generation of transactions.

In the “BP” configuration there is a universal document, “Operation”, and it is ideal for generating postings. There’s just one problem: the document is built in a tricky way, and data cannot be transferred into it so easily.

You will find an example of such a conversion in the source code for the article. The amount of code turned out to be quite large, so there is no point in publishing it in conjunction with the article. Let me just say that uploading again uses an arbitrary algorithm in the rules for uploading data.

Task No. 5. Data synchronization across multiple details

We've already looked at several examples, but we still haven't talked about synchronizing objects during migration. Let's imagine that we need to transfer counterparties and some of them are probably in the receiver database. How to transfer data and prevent duplicates from appearing? In this regard, CD offers several ways to synchronize transferred objects.

The first one is by unique identifier. Many objects have a unique identifier that guarantees uniqueness within a table. For example, the “Counterparties” directory cannot contain two elements with the same identifier. CD relies on this: for all created PKOs, search by identifier is enabled by default. When creating a PKO, you probably noticed the magnifying glass icon next to the object name.

Synchronizing by unique identifier is a reliable method, but it is not always appropriate. When merging “Counterparties” directories from several different systems, it will not help much.

In such cases, it is more correct to synchronize objects by several criteria: for example, search for counterparties by TIN, KPP and Name, or split the search into several stages.

Data conversion does not limit the developer in defining the search criteria. Let's look at an abstract example. Suppose we need to synchronize “Counterparties” directories from different infobases. Let’s prepare the PKO and, in the object conversion rule settings, check the box “Continue searching by search fields if the receiver object is not found by identifier”. With this action, we immediately define two search criteria: by unique identifier and by custom fields.

We have the right to choose the fields ourselves. By checking TIN, KPP and Name, we specify several search criteria at once. Convenient? Quite, but again this is not enough. What if we want to vary the search criteria? For example, first we search by the TIN+KPP combination, and if we find nothing, we try our luck with the name.

Such an algorithm is quite possible to implement. In the “Search fields” event handler we can specify up to 10 search options and define a separate set of search fields for each of them:

    If SearchOptionNumber = 1 Then
        SearchPropertyNameString = "TIN, KPP";
    ElsIf SearchOptionNumber = 2 Then
        SearchPropertyNameString = "Name";
    EndIf;
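The staged search itself can be modelled outside of 1C. Below is a minimal Python sketch of the idea, assuming that an option is skipped when the incoming object has an empty value in one of its fields; that rule, the field names and the `find_existing` function are illustrative assumptions, not a description of how the exchange processing is actually implemented.

```python
from typing import Dict, List, Optional

# Search options in priority order: every field in an option must match.
SEARCH_OPTIONS = [("tin", "kpp"), ("name",)]


def find_existing(incoming: Dict[str, str], receiver: List[Dict[str, str]]) -> Optional[Dict[str, str]]:
    """Try each search option in turn and return the first matching receiver object."""
    for fields in SEARCH_OPTIONS:
        # Assumption: an option with an empty key field is not usable, skip it.
        if any(not incoming.get(field) for field in fields):
            continue
        for candidate in receiver:
            if all(candidate.get(field) == incoming[field] for field in fields):
                return candidate
    return None


receiver = [
    {"tin": "7700000001", "kpp": "770001001", "name": "Acme"},
    {"tin": "", "kpp": "", "name": "Beta"},
]
by_tin = find_existing({"tin": "7700000001", "kpp": "770001001", "name": "ACME LLC"}, receiver)
by_name = find_existing({"tin": "", "kpp": "", "name": "Beta"}, receiver)
missing = find_existing({"tin": "1", "kpp": "2", "name": "Gamma"}, receiver)
```

The first object is matched at stage one even though its name differs, the second falls through to the name stage, and the third is not found at all, so it would be created as a new element.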

There are always several solutions

Any task has several solutions, and transferring data between different configurations is no exception. Each developer has the right to choose his own path, but if you constantly have to develop complex data migrations, I strongly recommend paying attention to “Data Conversion”. You may have to invest resources (time) in learning at first, but they will more than pay off on the first more or less serious project.

In my opinion, the 1C company unfairly ignores the topic of using data conversion. During the entire existence of the technology, only one book was published on it: “1C: Enterprise 8. Data conversion: exchange between application solutions.” The book is quite old (2008), but it is still advisable to familiarize yourself with it.

Knowledge of platforms is still necessary

“Data Conversion” is a universal tool, but if you plan to use it to create data migrations from configurations developed for the 1C:Enterprise 7.7 platform, you will have to get to know its built-in language. The syntax and ideology of the language are very different, so expect to spend time learning. Otherwise the principle remains the same.


Handler Before Recording Received Data

The procedure PKO_<ИмяПКО>_BeforeRecordingReceivedData (where <ИмяПКО> is the PKO name) in the common module ExchangeManagerThroughUniversalFormat contains the text of the “Before Recording Received Data” handler for a specific PKO. The handler text may be empty. In practice, however, it is always used when loading data to implement additional logic that must run before an object is written to the infobase, for example to decide whether changes should be loaded into existing infobase data or loaded as new data.

The handler has the following parameters:

- IBData. Type: DirectoryObject or DocumentObject. The infobase data element corresponding to the received data. If no matching data is found, this parameter has the value Undefined.

- ReceivedData. Type: DirectoryObject or DocumentObject. The data element produced by converting the XDTO data. It is written as is if the data is new for the infobase (the IBData parameter is Undefined). Otherwise IBData is replaced by ReceivedData (all properties are transferred from ReceivedData to IBData). If this standard replacement of infobase data by the received data is not required, write your own transfer logic and then set ReceivedData to Undefined.

- ConvertingProperties. Type: table of values. Contains the property conversion rules of the current object, initialized as part of the exchange session.

- ComponentsExchange. A structure that contains the exchange components: exchange rules and exchange parameters. The procedure that initializes the exchange components is located in the DataExchangeXDTOServer module.
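The contract between the two data parameters is easy to misread, so here is a small Python model of it (not 1C code). The function and handler names are hypothetical, records are plain dictionaries, and `None` stands in for Undefined; the sketch only mirrors the rules stated in the parameter list above.

```python
from typing import Callable, Optional

Record = dict
Handler = Callable[[Optional[Record], Record], Optional[Record]]


def write_received(ib_data: Optional[Record], received: Record, handler: Handler) -> Optional[Record]:
    """Return what the infobase holds after one received object is processed."""
    received = handler(ib_data, received)
    if received is None:
        return ib_data            # handler suppressed the standard replacement
    if ib_data is None:
        return dict(received)     # new object: written as received
    ib_data.update(received)      # found object: properties are overwritten
    return ib_data


def standard(ib_data, received):
    # Empty handler: the standard behaviour applies.
    return received


def keep_found(ib_data, received):
    # Leave objects that already exist untouched; still create new ones.
    return None if ib_data is not None else received


warehouse = {"name": "Main", "extra": "kept"}
replaced = write_received(dict(warehouse), {"name": "Renamed", "extra": ""}, standard)
untouched = write_received(dict(warehouse), {"name": "Renamed", "extra": ""}, keep_found)
created = write_received(None, {"name": "New", "extra": ""}, keep_found)
```

With the empty handler the found object loses its extra value, while `keep_found` preserves it and still lets new objects through.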

Let's look at some practical examples that I solved in a configuration extension, so as not to change the main configuration of standard 1C application solutions.

Do not replace found objects when loading

In the object conversion rules of edition 3.0, unlike edition 2.0, there is no “Do not replace found objects when loading” property, which prevented objects found in the receiver infobase by the synchronizing fields from being changed.

In the object conversion rules of edition 3.0, the IBData parameter has the value Undefined if the object is not found. In addition, if the ReceivedData parameter has the value Undefined when the handler exits, the infobase data will not be replaced.

The employer asked me to change the conversion rules between the standard configurations UT 11 and BP 3.0 so that the data of the organizations and warehouses directories in BP would not be overwritten during the exchange with UT. In particular, the additional attributes of these directories in BP were overwritten every time elements of these directories were registered in UT for sending to BP.

I performed this task in a configuration extension of BP so as not to change the main configuration. The solution is shown in Fig. 1. If the directory element exists (it was found), the IBData parameter is defined; so that the properties of ReceivedData are NOT transferred to IBData, ReceivedData should be set to Undefined.

Fig 3 Program code fragment in the configuration extension

If the directory object is not found, the IBData parameter has the value Undefined, and I call the ContinueCall procedure to continue calling the event handler of the extended configuration.

Do not reflect documents in regulated accounting

I was asked to make it possible not to reflect in BP 3.0 some shipping documents created in UT 11. For this purpose, I added an additional attribute to the sales document: “Do not reflect documents in regulated accounting”. If the flag is set, the document must be marked for deletion in the receiver database (BP 3.0). The difficulty is that documents in BP have no additional attributes, so I decided to use the comment field. When sending on the source side (UT 11), I fill the comment attribute with the appropriate text, and on the receiver side (BP), in the “Before Recording Received Data” handler, I set the deletion mark as shown in Fig. 2.
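Since the figure with the original code is not available here, the trick of carrying a flag through the comment field can be sketched in Python (not 1C code). The marker text, the dictionary keys and both function names are assumptions chosen for the example.

```python
SKIP_MARKER = "[skip regulated accounting]"  # hypothetical marker text


def before_send(document: dict, skip_flag: bool) -> dict:
    """Source side: encode the flag in the comment, because the receiver has no such attribute."""
    if skip_flag and SKIP_MARKER not in document["comment"]:
        document["comment"] = (document["comment"] + " " + SKIP_MARKER).strip()
    return document


def before_write(received: dict) -> dict:
    """Receiver side: turn the marker back into a deletion mark before the write."""
    if SKIP_MARKER in received.get("comment", ""):
        received["deletion_mark"] = True
    return received


flagged = before_write(before_send({"number": "0001", "comment": "urgent"}, skip_flag=True))
plain = before_write({"number": "0002", "comment": ""})
```

A document without the marker passes through unchanged, so the approach does not interfere with the regular exchange.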

Good day, dear blog readers. An article about conversion was previously published on this site; it showed how you can set up an exchange using constructors that create exchange rules.

This method can be used when converting databases from 1C version 7.7 to 8.2.

Now we will talk about how to transfer data between 1C 8.2 configurations that differ slightly from each other.

The main focus of this article is converting the tabular section of a document, which means that we will work with property group conversion rules (PKGS).

Preparing to set up PKGS - rules for converting a group of properties

We will transfer the “Receipt of Goods and Services” document, whose “VAT Rate” attribute in the “Goods” tabular section differs between the source and receiver databases. In the source database this attribute has the type “CatalogRef.VATRates” (a reference to a directory), and in the receiver database the type “EnumRef.VATRates” (a reference to an enumeration).

By the way, for convenience, you can define

In addition, in the receiver database we need to fill in the “Account BU” attribute, which is also located in the “Goods” tabular section of the “Receipt of Goods and Services” document. We will take the data from the “Account Accounting” attribute of the “Nomenclature” directory in the receiver base.

The situation is complicated by the fact that we will be working with a tabular section, so we need to configure property group conversion rules (PKGS). We will need to access the current row of the tabular section.

Creating conversion rules for a group of 1C properties

We have already developed conversion rules for the “Receipt of Goods and Services” document.

But for the “Goods” tabular section there is no property conversion rule for “VAT Rate”.

You need to add a new property conversion rule by clicking on the “Synchronize properties...” button.

The dialog “Setting up property conversion rules (Receipt of Goods and Services)” will appear.

You need to repeat what was done in the image and click the “OK” button.

Although we have created a conversion rule for the property group, it is not ready yet.

Remember that the “VAT Rate” attribute of the tabular section differs in value type. In the source database it has the type “CatalogRef.VATRates”, and in the receiver database the type “EnumRef.VATRates”. We are missing a rule here for converting from a directory to an enumeration.

Event handlers for property group conversion rules

To configure property conversion correctly, you need to create a new object conversion rule.

In the dialog that appears, we indicate that the “VAT Rates” directory is converted into an enumeration with the same name.

There will be no property conversion rules for this rule.

Therefore, when saving this rule, select “No” in the dialog that appears.

In the dialog asking “Create data upload rules?”, we also select “No”.

By double-clicking, we open the settings dialog of the object conversion rule (PKO) “VAT Rates”.

Here, on the “Event Handlers” tab, select the “On unloading” event and define the “Source” and “Link Node”, that is, what will be transferred.

    If Source.Rate = 0 Then
        LinkNode = "Rate0";
    ElsIf Source.Rate = 12 Then
        LinkNode = "Rate12";
    ElsIf Source.Name = "excluding VAT" Then
        LinkNode = "WithoutVAT";
    EndIf;

After writing the handler, click the “OK” button.

From the handler reference:

Source - Custom - the source object being uploaded (a reference or custom data).

Link Node - the initialized xml reference node. It can be used, for example, to initialize properties of other objects.

Now we will explicitly specify that this object conversion rule is used when uploading the “VAT Rate” attribute.

Go to the “Properties Conversion (*)” tab of the “Receipt of Goods and Services” document and open the conversion of the “Goods” property group. Double-click the “VAT Rate” property and, in the dialog that opens, select the conversion rule for the “VAT Rates” object in the “Rule” field.

Click the “OK” button.

Now we just need to fill in the accounting accounts according to the values defined for the item.

Let’s go to the “Object Conversion Rules” tab, find the “Receipt of Goods and Services” object and double-click it to open the object conversion rule (PKO) dialog.

Let’s go to the “Event Handlers” tab and, for the “After loading” event, write the following:

    // Fill the account in every row of the "Goods" tabular section
    For Each LineTC In Object.Goods Do
        LineTC.AccountAccountBU = LineTC.Nomenclature.AccountAccountBU;
    EndDo;

Now let's load these rules in the source using the external processing “Universal Data Interchange in XML Format” (V8Exchan82.epf).

Let's upload the data to an xml file. Then open the same processing in the receiver database, select the uploaded xml file and load the data.

By the way, the “Universal Data Interchange in XML Format” processing can be opened through the menu item "Service" | "Other Data Exchanges" | "Universal Data Interchange in XML Format". A little was written about this in a note about.

The purpose of this exchange rule is to transfer balances on mutual settlements from BP 2 to UT11.

Step-by-step creation of an exchange rule using the "Data Conversion" configuration (metadata must be loaded):

1) Create a data unloading rule: go to the “Rules for uploading data” tab and click Add. In the window that appears, select the sample object, which in our case is the self-supporting accounting register. Change the sampling method to “arbitrary algorithm”.

2) Let’s move on to writing the code itself. There is no self-supporting accounting register in UT, so we must convert its data. First, we need a query that returns the balances for mutual settlements according to our parameters. In the "Before processing" event handler we write the following query:

QueryText = "SELECT
|    SelfSupportingBalances.Account,
|    SelfSupportingBalances.Subconto1 AS Subconto1,
|    ISNULL(SUM(SelfSupportingBalances.AmountRemainingDt), 0) AS AmountRemainingDt,
|    ISNULL(SUM(SelfSupportingBalances.AmountRemainingCt), 0) AS AmountRemainingCt,
|    MAX(SelfSupportingBalances.Subconto2.Date) AS SettlementDocumentDate,
|    MAX(SelfSupportingBalances.Subconto2.Number) AS SettlementDocumentNumber
|FROM
|    AccountingRegister.SelfSupporting.Balance(&OnDate, Account = &Account,) AS SelfSupportingBalances
|WHERE
|    SelfSupportingBalances.Subconto1.Parent <> &group
|    AND SelfSupportingBalances.Subconto1.Parent <> &group1
|GROUP BY
|    SelfSupportingBalances.Account,
|    SelfSupportingBalances.Subconto1,
|    SelfSupportingBalances.Subconto2
|ORDER BY
|    Subconto1
|AUTOORDER";

My task was to limit the groups of counterparties for which mutual settlements are uploaded.

We determine the values of the variables that will be used in the future.

OnDate = Date("20130101");
TD = CurrentDate();
group = Catalogs.Counterparties.FindByDescription("Buyers");
group1 = Catalogs.Counterparties.FindByDescription("Returns from INDIVIDUALS");

We create a table that we will later pass to the value conversion rule.

TZ = New ValueTable;
TZ.Columns.Add("Counterparty");
TZ.Columns.Add("Amount");
TZ.Columns.Add("AmountRegl");
TZ.Columns.Add("SettlementDocument");
TZ.Columns.Add("SettlementDocumentDate");
TZ.Columns.Add("SettlementDocumentNumber");
TZ.Columns.Add("Partner");
TZ.Columns.Add("SettlementCurrency");
TZ.Columns.Add("PaymentDate");

We set the parameters, call the request, fill out the table and call the conversion rule.

Query = New Query(QueryText);
Query.SetParameter("group", group);
Query.SetParameter("group1", group1);
Query.SetParameter("OnDate", OnDate);
Query.SetParameter("Account", ChartsOfAccounts.SelfSupporting.SettlementsWithOtherSuppliersAndContractors); // 76.05
Selection = Query.Execute().Select();
TZ.Clear();
While Selection.Next() Do
    // Skip counterparties with no credit balance.
    If Selection.AmountRemainingCt = 0 Or Selection.AmountRemainingCt = "" Then
        Continue;
    EndIf;
    // A negative balance is reported but still transferred.
    If Selection.AmountRemainingCt < 0 Then
        Message("" + Selection.Subconto1 + " negative value " + Selection.AmountRemainingCt);
    EndIf;
    LineTZ = TZ.Add();
    LineTZ.Counterparty = Selection.Subconto1;
    LineTZ.Amount = Selection.AmountRemainingCt;
    LineTZ.AmountRegl = Selection.AmountRemainingCt;
    LineTZ.SettlementDocumentDate = Selection.SettlementDocumentDate;
    LineTZ.SettlementDocumentNumber = Selection.SettlementDocumentNumber;
    LineTZ.PaymentDate = TD;
EndDo;

OutgoingData = New Structure;
OutgoingData.Insert("Date", CurrentDate());
OutgoingData.Insert("CalculationsWithPartners", TZ);
OutgoingData.Insert("OperationType", "Balances of Debts to Suppliers");
OutgoingData.Insert("Comment", "Generated on account credit 76.05");
Message("76.05 CREDIT start");
UploadByRule(, OutgoingData, "InputBalancesByMutualSettlement_7605Credit");
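The row-filtering part of the handler above can be hard to follow in isolation, so here is a minimal sketch of the same logic in Python (not 1C), under stated assumptions. `BalanceRecord`, `build_settlement_rows` and the dictionary keys are hypothetical names made up for this illustration; they only mirror the idea of skipping zero credit balances, reporting negative ones, and copying the rest into a table.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Iterable, List, Optional


@dataclass
class BalanceRecord:
    """One row of the query result (hypothetical stand-in for the selection)."""
    counterparty: str
    credit_balance: float
    document_date: Optional[date] = None
    document_number: Optional[str] = None


def build_settlement_rows(
    records: Iterable[BalanceRecord],
    payment_date: date,
    warn: Callable[[str], None] = print,
) -> List[dict]:
    """Turn balance records into rows for the outgoing table."""
    rows = []
    for rec in records:
        if rec.credit_balance == 0:
            continue  # nothing to transfer for this counterparty
        if rec.credit_balance < 0:
            # Reported, but the row is still transferred, as in the handler.
            warn(f"{rec.counterparty} negative value {rec.credit_balance}")
        rows.append({
            "counterparty": rec.counterparty,
            "amount": rec.credit_balance,
            "amount_regl": rec.credit_balance,
            "document_date": rec.document_date,
            "document_number": rec.document_number,
            "payment_date": payment_date,
        })
    return rows
```

Passing the reporting function as a parameter is a choice of this sketch; it makes the warning path easy to check without printing.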

We perform the same operation for the remaining required accounts (their description, as well as the ready-made rule, is in the attachment).

3) Let’s move on to creating object conversion rules; to do this, open the “Object Conversion Rules” tab. Add a new rule there named "InputBalancesByMutualSettlement_7605Credit", leave the source object empty, set the receiver object to the document "Enter Balances", and on the settings tab clear the flag "Search the receiver object by the internal identifier of the source object".

In the "Before loading" event handler we will write the following code:

GenerateNewNumberOrCodeIfNotSpecified = true;

In the "After loading" event handler we will write:

Execute(Algorithms.AfterLoadInputRemainings);

This executes an algorithm with the following content:

currency = Constants.RegulatedAccountingCurrency.Get();
Object.Owner = SessionParameters.CurrentUser;
Object.Organization = Parameters.Organization;
For Each page In Object.CalculationsWithPartners Do
    page.SettlementDocument = Catalogs.CounterpartyAgreements.EmptyRef();
    page.SettlementCurrency = currency;
    If ValueIsFilled(page.Counterparty.Partner) Then
        page.Partner = page.Counterparty.Partner;
    Else
        partner = Catalogs.Partners.FindByDescription(page.Counterparty.Description);
        If partner <> Undefined And partner <> Catalogs.Partners.EmptyRef() Then
            page.Partner = partner;
            // Store the found partner on the counterparty as well.
            object2 = page.Counterparty.GetObject();
            object2.Partner = partner;
            object2.Write();
        Else
            Execute(Algorithms.AddPartner);
        EndIf;
    EndIf;
EndDo;

This algorithm is executed on the receiver side (UT). In addition to transferring balances for mutual settlements, there is the task of transferring counterparties, but UT uses partners. So, after generating the document, we check whether all counterparties and partners exist in the receiver database; if for some reason they do not, we add them.
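The check described here follows a simple fallback order: use the partner already set on the counterparty, otherwise look one up by name, otherwise create one. A minimal sketch of that order in Python (not 1C) is given below. The dictionaries and the `create_partner` callback are assumptions of this illustration and stand in for the receiver database and the partner-creation step.

```python
from typing import Callable, Dict, Optional


def resolve_partner(
    counterparty: dict,
    partners_by_name: Dict[str, str],
    create_partner: Callable[[dict], str],
) -> str:
    """Return the partner for a counterparty, creating one if needed."""
    partner: Optional[str] = counterparty.get("partner")
    if partner:
        return partner  # the counterparty already has a partner
    # Fall back to a partner with the same name as the counterparty.
    partner = partners_by_name.get(counterparty["name"])
    if partner is None:
        # Nothing found: create a new partner and remember it.
        partner = create_partner(counterparty)
        partners_by_name[counterparty["name"]] = partner
    # In both fallback cases, store the partner on the counterparty.
    counterparty["partner"] = partner
    return partner
```

Writing the resolved partner back to the counterparty means the lookup is only needed once per counterparty.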

Counterparties are added by the conversion rule for the “Counterparties” directory; you can create it in the same way as the previous rule, but let the system map the necessary fields.

An algorithm was created for partners that is executed on the receiver side.

In order to execute the algorithm on the receiver side, you need to check the “Used when loading” flag in the upper right corner of the algorithm window (when editing it).

Below is the code for the "Add partner" algorithm:

nPartner = Catalogs.Partners.CreateItem();
nPartner.Description = page.Counterparty.Description;
nPartner.Comment = "Created when loading from BP";
nPartner.NameFull = page.Counterparty.NameFull;
// The relationship flags are derived from the counterparty's additional information text.
nPartner.Supplier = ?(Find(page.Counterparty.AdditionalInformation, "Supplier") > 0, True, False);
nPartner.Client = ?(Find(page.Counterparty.AdditionalInformation, "Client") > 0, True, False);
nPartner.OtherRelations = ?(Find(page.Counterparty.AdditionalInformation, "Other") > 0, True, False);
nPartner.Write();
page.Partner = nPartner.Ref;
// Link the new partner to the counterparty in the receiver database.
counterparty = Catalogs.Counterparties.FindByDescription(page.Counterparty.Description);
object2 = counterparty.GetObject();
object2.Partner = nPartner.Ref;
object2.Write();

Let's go back to the object conversion rule. Now we need to establish the correspondence between the source and receiver fields; this could also have been done before writing the code. To map fields, the lower tabular section has a button that calls the “Properties Synchronization” wizard. In this wizard, we can either map the fields or leave them without a source and without a receiver. In our case, we leave all fields and tabular sections without a source.

After the required fields have been selected in the lower tabular section, set the flag in the “Get from incoming data” column for each field. This flag tells the system to look for the field in the incoming data. It is important that the field name matches the name in the incoming data, otherwise a message is displayed stating that the field was not found.
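The name-matching requirement can be pictured as a lookup by field name in the incoming data. The sketch below, in Python rather than 1C, is only a model of that idea. The function name is hypothetical, an exact-name lookup is an assumption, and how the actual processing performs the search and words its message is not covered by the text.

```python
from typing import Any, Dict, Iterable, List, Tuple


def fill_from_incoming(
    property_names: Iterable[str],
    incoming: Dict[str, Any],
) -> Tuple[Dict[str, Any], List[str]]:
    """Collect values for flagged properties and list the names not found."""
    values: Dict[str, Any] = {}
    missing: List[str] = []
    for name in property_names:
        if name in incoming:
            values[name] = incoming[name]
        else:
            # Corresponds to the "field was not found" situation.
            missing.append(name)
    return values, missing
```

For example, if the incoming data has the keys "Date" and "Comment" but a property is named "OperationType", that property ends up in the list of missing names.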

The text does not describe all the nuances of the process.