An example of a simple neural network in python. Neural network against DDoS

This time I decided to study neural networks. I was able to acquire basic skills in this matter over the summer and fall of 2015. By basic skills I mean that I can create a simple neural network from scratch. You can find examples in my GitHub repositories. In this article, I will give some explanations and share resources that you may find useful in your study.

Step 1. Neurons and feedforward method

So what is a “neural network”? Let's wait with this and deal with one neuron first.

A neuron is like a function: it takes several values as input and returns one.

The circle below represents artificial neuron. It receives 5 and returns 1. The input is the sum of the three synapses connected to the neuron (three arrows on the left).

On the left side of the picture we see 2 input values ( Green colour) and offset (highlighted in brown).

The input data can be numerical representations of two different properties. For example, when creating a spam filter, they could mean the presence of more than one word written in CAPITAL LETTERS and the presence of the word "Viagra".

The input values are multiplied by their so-called "weights", 7 and 3 (highlighted in blue).

Now we add the resulting values with the offset and get a number, in our case 5 (highlighted in red). This is the input of our artificial neuron.

Then the neuron performs some calculation and produces an output value. We got 1 because the rounded value of the sigmoid at point 5 is 1 (we'll talk about this function in more detail later).

If this were a spam filter, the fact that output 1 would mean that the text was marked as spam by the neuron.

Illustration of a neural network from Wikipedia.

If you combine these neurons, you get a directly propagating neural network - the process goes from input to output, through neurons connected by synapses, as in the picture on the left.

Step 2. Sigmoid

After you've watched Welch Labs' lessons, it's a good idea to check out Week 4 of Coursera's machine learning course on neural networks to help you understand how they work. The course goes very deep into mathematics and is based on Octave, while I prefer Python. Because of this I skipped the exercises and learned everything necessary knowledge from the video.

A sigmoid simply maps your value (on the horizontal axis) to a range from 0 to 1.

My first priority was to study the sigmoid, as it has figured in many aspects of neural networks. I already knew something about it from the third week of the above-mentioned course, so I watched the video from there.

But you won't get far with videos alone. For complete understanding, I decided to code it myself. So I started writing an implementation of a logistic regression algorithm (which uses a sigmoid).

It took a whole day, and the result was hardly satisfactory. But it doesn't matter, because I figured out how everything works. The code can be seen.

You don't have to do this yourself, since it requires special knowledge - the main thing is that you understand how the sigmoid works.

Step 3. Backpropagation method

Understanding how a neural network works from input to output is not that difficult. It is much more difficult to understand how a neural network learns from data sets. The principle I used is called

James Loy, Georgia Tech. A beginner's guide to creating your own neural network in Python.

Motivation: focusing on personal experience in studying deep learning, I decided to create a neural network from scratch without complicated educational library, such as, for example, . I believe that for a beginner Data Scientist it is important to understand the internal structure.

This article contains what I learned and hopefully it will be useful for you too! Other useful articles on the topic:

What is a neural network?

Most articles on neural networks draw parallels with the brain when describing them. It's easier for me to describe neural networks as mathematical function, which maps a given input to a desired output without going into detail.

Neural networks consist of the following components:

- input layer, x

- arbitrary quantity hidden layers

- output layer, ŷ

- kit scales And displacements between each layer W And b

- selection for each hidden layer σ ; in this work we will use the Sigmoid activation function

The diagram below shows the architecture of a two-layer neural network (note that the input layer is usually excluded when counting the number of layers in a neural network).

Creating a Neural Network class in Python is simple:

Neural network training

Exit ŷ simple two-layer neural network:

In the above equation, the weights W and the bias b are the only variables that affect the output ŷ.

Naturally, the correct values for the weights and biases determine the accuracy of the predictions. Process fine tuning weights and biases from the input data is known as .

Each iteration of the learning process consists of the following steps

- computing the predicted output ŷ, called forward propagation

- updating weights and biases, called

The sequential graph below illustrates the process:

Direct distribution

As we saw in the graph above, forward propagation is just a simple calculation, and for a basic 2-layer neural network, the output of the neural network is given by:

Let's add a forward propagation function to our Python code to do this. Note that for simplicity, we have assumed offsets to be 0.

However, we need a way to assess the “goodness” of our forecasts, that is, how far off our forecasts are). Loss function just allows us to do this.

Loss function

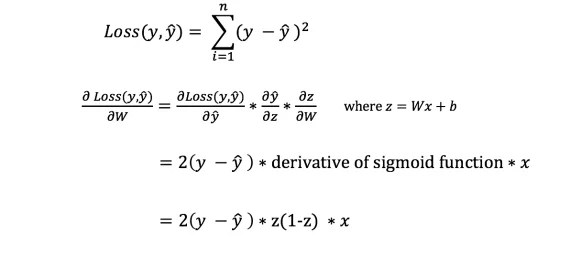

There are many loss functions available, and the nature of our problem should dictate our choice of loss function. In this work we will use sum of squared errors as a loss function.

The sum of squared errors is the average of the differences between each predicted and actual value.

The learning goal is to find a set of weights and biases that minimizes the loss function.

Backpropagation

Now that we have measured the error in our forecast (loss), we need to find a way propagating the error back and update our weights and biases.

To know the appropriate amount to adjust the weights and biases, we need to know the derivative of the loss function with respect to the weights and biases.

Let us recall from the analysis that The derivative of a function is the slope of the function.

If we have a derivative, then we can simply update the weights and biases by increasing/decreasing them (see diagram above). It is called .

However, we cannot directly calculate the derivative of the loss function with respect to weights and biases because the loss function equation does not contain weights and biases. So we need a chain rule to help with the calculation.

Phew! This was cumbersome, but it allowed us to get what we needed — the derivative (slope) of the loss function with respect to the weights. Now we can adjust the weights accordingly.

Let's add the backpropagation function to our Python code:

Checking the operation of the neural network

Now that we have our full code in Python to perform forward and back propagation, let's look at our neural network as an example and see how it works.

Ideal set of scales

Ideal set of scales Our neural network must learn the ideal set of weights to represent this function.

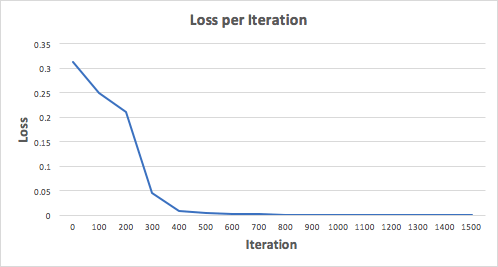

Let's train the neural network for 1500 iterations and see what happens. Looking at the iteration loss plot below, we can clearly see that the loss monotonically decreases to a minimum. This is consistent with the gradient descent algorithm we discussed earlier.

Let's look at the final prediction (output) from the neural network after 1500 iterations.

We did it! Our forward and backpropagation algorithm has shown that the neural network performs successfully, and the predictions converge on the true values.

Note that there is a slight difference between the predictions and the actual values. This is desirable because it prevents overfitting and allows the neural network to better generalize to unseen data.

Final Thoughts

I learned a lot in the process of writing my own neural network from scratch. Although libraries deep learning, such as TensorFlow and Keras, allow the creation deep networks without full understanding internal work neural network, I find it useful for aspiring Data Scientists to gain a deeper understanding of them.

I invested a lot of my personal time in this work, and I hope you find it useful!

Keras is a popular deep learning library that has contributed greatly to the commercialization of deep learning. Keras is easy to use and allows you to create neural networks with just a few lines of Python code.

In this article, you will learn how to use Keras to create a neural network that predicts how users will rate a product based on their reviews, classifying it into two categories: positive or negative. This task is called sentiment analysis (sentiment analysis), and we'll solve it with the help of the movie review site IMDb. The model we will build can also be applied to solve other problems after minor modifications.

Please note that we will not go into detail about Keras and deep learning. This post is intended to provide a schematic in Keras and introduce its implementation.

- What is Keras?

- What is sentiment analysis?

- Dataset IMDB

- Data Exploration

- Data preparation

- Model creation and training

What is Keras?

Keras is an open source Python library that makes it easy to create neural networks. The library is compatible with , Microsoft Cognitive Toolkit, Theano and MXNet. Tensorflow and Theano are the most commonly used Python numerical frameworks for developing deep learning algorithms, but they are quite difficult to use.

Assessing the popularity of frameworks machine learning in 7 categories

Assessing the popularity of frameworks machine learning in 7 categories Keras, on the other hand, provides simple and convenient way creating deep learning models. Its creator, François Chollet, developed it in order to speed up and simplify the process of creating neural networks as much as possible. He focused on extensibility, modularity, minimalism, and Python support. Keras can be used with GPU and CPU; it supports both Python 2 and Python 3. Keras Google has made a major contribution to the commercialization of deep learning and because it contains state-of-the-art deep learning algorithms that were previously not only inaccessible, but also unusable.

What is sentiment analysis (sentiment analysis)?

Sentiment analysis can be used to determine a person's attitude (i.e., mood) toward text, an interaction, or an event. Therefore, sentiment analysis belongs to the field of natural language processing, in which the meaning of the text must be deciphered to extract sentiment and sentiment from it.

Example of a sentiment analysis scale

Example of a sentiment analysis scale The mood spectrum is usually divided into positive, negative and neutral categories. Using sentiment analysis, you can, for example, predict the opinions of customers and their attitude towards a product based on the reviews they write. Therefore, sentiment analysis is widely applied to reviews, surveys, texts and much more.

IMDb dataset

Reviews on IMDb

Reviews on IMDb The IMDb dataset consists of 50,000 movie reviews from users, labeled as positive (1) and negative (0).

- Reviews are pre-processed and each one is encoded with a sequence of word indices as integers.

- Words in reviews are indexed by their overall frequency of occurrence in the dataset. For example, the integer "2" encodes the second most frequently used word.

- The 50,000 reviews are divided into two sets: 25,000 for training and 25,000 for testing.

The dataset was created by researchers at Stanford University and presented in a 2011 paper, in which the achieved prediction accuracy was 88.89%. The dataset was also used as part of a Keggle community competition "Bag of Words Meets Bags of Popcorn" in 2011.

Importing Dependencies and Retrieving Data

Let's start by importing the necessary dependencies to preprocess the data and build the model.

%matplotlib inline import matplotlib import matplotlib.pyplot as plt import numpy as np from keras.utils import to_categorical from keras import models from keras import layers

Let's load the IMDb dataset, which is already built into Keras. Since we don't want to have 50/50 training and testing data, we will immediately merge this data after loading for later splitting in a ratio of 80/20:

From keras.datasets import imdb (training_data, training_targets), (testing_data, testing_targets) = imdb.load_data(num_words=10000) data = np.concatenate((training_data, testing_data), axis=0) targets = np.concatenate((training_targets , testing_targets), axis=0)

Data Exploration

Let's study our dataset:

Print("Categories:", np.unique(targets)) print("Number of unique words:", len(np.unique(np.hstack(data)))) Categories: Number of unique words: 9998 length = print ("Average Review length:", np.mean(length)) print("Standard Deviation:", round(np.std(length))) Average Review length: 234.75892 Standard Deviation: 173.0

You can see that all the data falls into two categories: 0 or 1, which represents the sentiment of the review. The entire dataset contains 9998 unique words, the average size The review is 234 words with a standard deviation of 173.

Let's look at a simple way to learn:

Print("Label:", targets) Label: 1 print(data)

Here you see the first review from the dataset, which is marked as positive (1). The following code converts the indices back into words so we can read them. In it, every unknown word is replaced with “#”. This is done using the function get_word_index().

Index = imdb.get_word_index() reverse_index = dict([(value, key) for (key, value) in index.items()]) decoded = " ".join() print(decoded) # this film was just brilliant casting location scenery story direction everyone"s really suited the part they played and you could just imagine being there robert # is an amazing actor and now the same being director # father came from the same scottish island as myself so i loved the fact there was a real connection with this film the witty remarks throughout the film were great it was just brilliant so much that i bought the film as soon as it was released for # and would recommend it to everyone to watch and the fly fishing was amazing really cried at the end it was so sad and you know what they say if you cry at a film it must have been good and definitely this was also # to the two little boys"s that played the # of Norman and Paul they were just brilliant children are often left out of the # list i think because the stars that play them all grown up are such a big profile for the whole film but these children are amazing and should be praised for what they have done don"t you think the whole story was so lovely because it was true and was someone's life after all that was shared with us all

Data preparation

It's time to prepare the data. We need to vectorize each review and fill it with zeros so that the vector contains exactly 10,000 numbers. This means that we fill every review that is shorter than 10,000 words with zeros. This is done because the most great review is almost the same size, and each element of the input data to our neural network should be the same size. You also need to convert the variables to type float.

Def vectorize(sequences, dimension = 10000): results = np.zeros((len(sequences), dimension)) for i, sequence in enumerate(sequences): results = 1 return results data = vectorize(data) targets = np. array(targets).astype("float32")

Let's divide the dataset into training and testing sets. The training set will consist of 40,000 reviews, the testing set will consist of 10,000.

Test_x = data[:10000] test_y = targets[:10000] train_x = data train_y = targets

Model creation and training

Now you can create a simple neural network. Let's start by defining the type of model we want to create. There are two types of models available in Keras: sequential and functional API.

Then you need to add input, hidden and output layers. To prevent overfitting, we will use an exception between them ( "dropout"). Note that you should always use an exclusion rate between 20% and 50%. Each layer uses a function "dense" to completely connect the layers to each other. In hidden layers we will use "relu", because this almost always leads to satisfactory results. Don't be afraid to experiment with other activation functions. At the output layer, we use a sigmoid function that renormalizes values in the range from 0 to 1. Note that we set the size of the input dataset elements to 10,000 because our reviews are up to 10,000 integers in size. The input layer accepts elements with size 10,000 and outputs elements with size 50.

Finally, let Keras output short description the model we just created.

# Input - Layer model.add(layers.Dense(50, activation = "relu", input_shape=(10000,))) # Hidden - Layers model.add(layers.Dropout(0.3, noise_shape=None, seed=None) ) model.add(layers.Dense(50, activation = "relu") model.add(layers.Dropout(0.2, noise_shape=None, seed=None)) model.add(layers.Dense(50, activation = "relu ")) # Output- Layer model.add(layers.Dense(1, activation = "sigmoid"))model.summary() model.summary() _________________________________________________________________ Layer (type) Output Shape Param # ======= ===================================================== ======== dense_1 (Dense) (None, 50) 500050 _________________________________________________________________ dropout_1 (Dropout) (None, 50) 0 _________________________________________________________________ dense_2 (Dense) (None, 50) 2550 _________________________________________________________________ dropout_2 (Dropout) (None, 50) 0 _________________________________________________________________ dense_3 (Dense) (None, 50) 2550 _________________________________________________________________ dense_4 (Dense) (None, 1) 51 ============= ====================================== Total params: 505,201 Trainable params: 505,201 Non-trainable params : 0 _________________________________________________________________

Now we need to compile our model, that is, essentially set it up for training. We will use optimizer "adam". An optimizer is an algorithm that changes weights and biases during training. As loss functions We use binary cross-entropy (since we work with binary classification), accuracy as an evaluation metric.

Model.compile(optimizer = "adam", loss = "binary_crossentropy", metrics = ["accuracy"])

Now we can train our model. We will do this with a batch size of 500 and only two epochs as I found out that the model begins to retrain, if you train it longer. The batch size determines the number of elements that will be distributed throughout the network, and the epoch is one pass through all the elements of the dataset. Usually larger size party leads to more fast learning, but not always to fast convergence. Smaller size batches train more slowly, but can converge faster. The choice of one or the other definitely depends on the type of problem being solved, and it is better to try each of them. If you are new to this, I would suggest you first use lot size 32, which is a kind of standard.

Results = model.fit(train_x, train_y, epochs= 2, batch_size = 500, validation_data = (test_x, test_y)) Train on 40000 samples, validate on 10000 samples Epoch 1/2 40000/40000 [======= =======================] - 5s 129us/step - loss: 0.4051 - acc: 0.8212 - val_loss: 0.2635 - val_acc: 0.8945 Epoch 2/2 40000 /40000 [=============================] - 4s 90us/step - loss: 0.2122 - acc: 0.9190 - val_loss: 0.2598 - val_acc: 0.8950

Let's evaluate the model's performance:

Print(np.mean(results.history["val_acc"])) 0.894750000536

Great! Our simple model has already broken the accuracy record in a 2011 article mentioned at the beginning of the post. Feel free to experiment with network parameters and the number of layers.

The complete model code is given below:

Import numpy as np from keras.utils import to_categorical from keras import models from keras import layers from keras.datasets import imdb (training_data, training_targets), (testing_data, testing_targets) = imdb.load_data(num_words=10000) data = np.concatenate( (training_data, testing_data), axis=0) targets = np.concatenate((training_targets, testing_targets), axis=0) def vectorize(sequences, dimension = 10000): results = np.zeros((len(sequences), dimension) ) for i, sequence in enumerate(sequences): results = 1 return results data = vectorize(data) targets = np.array(targets).astype("float32") test_x = data[:10000] test_y = targets[:10000 ] train_x = data train_y = targets model = models.Sequential() # Input - Layers model.add(layers.Dense(50, activation = "relu", input_shape=(10000,))) # Hidden - Layers model.add( layers.Dropout(0.3, noise_shape=None, seed=None)) model.add(layers.Dense(50, activation = "relu")) model.add(layers.Dropout(0.2, noise_shape=None, seed=None) ) model.add(layers.Dense(50, activation = "relu")) # Output- Layer model.add(layers.Dense(1, activation = "sigmoid")) model.summary() # compiling the model model. compile(optimizer = "adam", loss = "binary_crossentropy", metrics = ["accuracy"]) results = model.fit(train_x, train_y, epochs= 2, batch_size = 500, validation_data = (test_x, test_y)) print( "Test-Accuracy:", np.mean(results.history["val_acc"]))

Results

You learned what sentiment analysis is and why Keras is one of the most popular deep learning libraries.

We created a simple neural network with six layers that can calculate the sentiment of movie reviewers with 89% accuracy. Now you can use this model to analyze binary sentiments in other sources, but to do this you will have to make their size equal to 10,000 or change the parameters of the input layer.

This model (with minor modifications) can be applied to other machine learning problems.

Neural networks are created and trained mainly in Python. Therefore, it is very important to have a basic understanding of how to write programs in it. In this article I will briefly and clearly talk about the basic concepts of this language: variables, functions, classes and modules.

The material is intended for people unfamiliar with programming languages.

First you need to install Python. Then you need to install a convenient environment for writing programs in Python. The portal is dedicated to these two steps.

If everything is installed and configured, you can start.

Variables

Variable- a key concept in any programming language (and not only in them). The easiest way to think of a variable is as a box with a label. This box contains something (a number, a matrix, an object, ...) that is valuable to us.

For example, we want to create a variable x that should store the value 10. In Python code creating this variable will look like this:

On the left we we announce a variable named x. This is equivalent to putting a name tag on the box. Next comes the equals sign and the number 10. The equals sign plays an unusual role here. It does not mean that "x equals 10". Equality in in this case puts the number 10 in the box. To put it more correctly, we assign variable x is the number 10.

Now, in the code below we can access this variable and also perform various actions with it.

You can simply display the value of this variable on the screen:

X=10 print(x)

print(x) represents a function call. We will consider them further. The important thing now is that this function prints to the console what is located between the brackets. Between the brackets we have x. Previously, we assigned x the value 10. This is what 10 is printed in the console if you run the program above.

Various simple operations can be performed with variables that store numbers: addition, subtraction, multiplication, division and exponentiation.

X = 2 y = 3 # Addition z = x + y print(z) # 5 # Difference z = x - y print(z) # -1 # Product z = x * y print(z) # 6 # Division z = x / y print(z) # 0.66666... # Exponentiation z = x ** y print(z) # 8

In the code above, we first create two variables containing 2 and 3. Then we create a variable z that stores the result of the operation on x and y and prints the results to the console. This example clearly shows that a variable can change its value during program execution. So, our variable z changes its value as many as 5 times.

Functions

Sometimes it becomes necessary to perform the same actions over and over again. For example, in our project we often need to display 5 lines of text.

“This is a very important text!”

“This text cannot be read”

“The mistake in the top line was made on purpose”

"Hello and goodbye"

"End"

Our code will look like this:

X = 10 y = x + 8 - 2 print("This is a very important text!") print("This text cannot be read") print("The error in the top line was made on purpose") print("Hello and bye") print (“The End”) z = x + y print(“This is a very important text!”) print(“This text cannot be read”) print(“The mistake in the top line was made on purpose”) print(“Hello and bye”) print ("The End") test = z print("This is a very important text!") print("This text cannot be read") print("The mistake in the top line was made on purpose") print("Hello and bye") print(" End")

It all looks very redundant and inconvenient. In addition, there was an error in the second line. It can be fixed, but it will have to be fixed in three places at once. What if these five lines are called 1000 times in our project? And everything is in different places and files?

Especially for cases where you need to frequently execute the same commands, you can create functions in programming languages.

Function- a separate block of code that can be called by name.

A function is defined using the def keyword. This is followed by the name of the function, then parentheses and a colon. Next, you need to list, indented, the actions that will be performed when the function is called.

Def print_5_lines(): print("This is a very important text!") print("This text cannot be read") print("The error in the top line was made on purpose") print("Hello and bye") print("The End")

Now we have defined the print_5_lines() function. Now, if in our project we once again need to insert five lines, then we simply call our function. It will automatically perform all actions.

# Define the function def print_5_lines(): print("This is a very important text!") print("This text cannot be read") print("The error in the top line was intentional") print("Hello and bye") print(" End") # Our project code x = 10 y = x + 8 - 2 print_5_lines() z = x + y print_5_lines() test = z print_5_lines()

Convenient, isn't it? We have seriously improved the readability of the code. In addition, functions are also good because if you want to change some of the actions, then you just need to correct the function itself. This change will work in all places where your function is called. That is, we can correct the error in the second line of the output text (“no” > “no”) in the body of the function. Correct option will be automatically called in all places in our project.

Functions with parameters

It is certainly convenient to simply repeat several actions. But that is not all. Sometimes we want to pass some variable to our function. This way, the function can accept data and use it while executing commands.

The variables we pass to the function are called arguments.

Let's write simple function, which adds two numbers given to it and returns the result.

Def sum(a, b): result = a + b return result

The first line looks almost the same as regular functions. But between the brackets there are now two variables. This options functions. Our function has two parameters (that is, it takes two variables).

Parameters can be used inside a function just like regular variables. On the second line we create a variable result, which is equal to the sum of the parameters a and b. On the third line we return the value of the result variable.

Now, in further code we can write something like:

New = sum(2, 3) print(new)

We call the sum function and pass it two arguments in turn: 2 and 3. 2 becomes the value of variable a, and 3 becomes the value of variable b. Our function returns a value (the sum of 2 and 3) and we use it to create a new variable, new .

Remember. In the code above, the numbers 2 and 3 are the arguments to the sum function. And in the sum function itself, the variables a and b are parameters. In other words, the variables that we pass to a function when it is called are called arguments. But inside the function, these passed variables are called parameters. In fact, these are two names for the same thing, but they should not be confused.

Let's look at another example. Let's create a function square(a) that takes one number and squares it:

Def square(a): return a * a

Our function consists of just one line. It immediately returns the result of multiplying the parameter a by a .

I think you have already guessed that we also output data to the console using a function. This function is called print() and it prints the argument passed to it to the console: a number, a string, a variable.

Arrays

If a variable can be thought of as a box that stores something (not necessarily a number), then arrays can be thought of as bookshelves. They contain several variables at once. Here is an example of an array of three numbers and one string:

Array =

Here is an example when a variable does not contain a number, but some other object. In this case, our variable contains an array. Each array element is numbered. Let's try to display some element of the array:

Array = print(array)

In the console you will see the number 89. But why 89 and not 1? The thing is that in Python, as in many other programming languages, the numbering of arrays starts from 0. Therefore, array gives us second element of the array, not the first one. To call the first one, you had to write array .

Array size

Sometimes it is very useful to get the number of elements in an array. You can use the len() function for this. It will count the number of elements and return their number.

Array = print(len(array))

The console will display the number 4.

Conditions and cycles

By default, any program simply executes all commands in a row from top to bottom. But there are situations when we need to check some condition, and depending on whether it is true or not, perform different actions.

In addition, there is often a need to repeat almost the same sequence of commands many times.

In the first situation, conditions help, and in the second, cycles help.

Conditions

Conditions are needed to perform two different sets of actions depending on whether the statement being tested is true or false.

In Python, conditions can be written using the if: ... else: ... construct. Let us have some variable x = 10. If x is less than 10, then we want to divide x by 2. If x is greater than or equal to 10, then we want to create another new variable, which is equal to the sum of x and the number 100. This is what the code will look like:

X = 10 if(x< 10): x = x / 2 print(x) else: new = x + 100 print(new)

After creating the variable x, we begin writing our condition.

It all starts with the keyword if (translated from English as “if”). In parentheses we indicate the expression to be checked. In this case, we check whether our variable x is really less than 10. If it is really less than 10, then we divide it by 2 and print the result to the console.

Then comes keyword else , after which begins a block of actions that will be executed if the expression in parentheses after the if is false.

If it is greater than or equal to 10, then we create a new variable, which is equal to x + 100, and also output it to the console.

Cycles

Loops are needed to repeat actions many times. Let's say we want to display a table of the squares of the first 10 natural numbers. It can be done like this.

Print("Square 1 is " + str(1**2)) print("Square 2 is " + str(2**2)) print("Square 3 is " + str(3**2)) print( "Square 4 is " + str(4**2)) print("Square 5 is " + str(5**2)) print("Square 6 is " + str(6**2)) print("Square 7 is " + str(7**2)) print("Square of 8 is " + str(8**2)) print("Square of 9 is " + str(9**2)) print("Square of 10 is " + str(10**2))

Don't be surprised by the fact that we add lines. "beginning of line" + "end" in Python simply means concatenating strings: "beginning of line end". In the same way above, we add the string “The square of x is equal to ” and the result of raising the number to the 2nd power, converted using the function str(x**2).

The code above looks very redundant. What if we need to print the squares of the first 100 numbers? We are tormented to withdraw...

It is for such cases that cycles exist. There are 2 types of loops in Python: while and for. Let's deal with them one by one.

The while loop repeats the necessary commands as long as the condition remains true.

X = 1 while x<= 100: print("Квадрат числа " + str(x) + " равен " + str(x**2)) x = x + 1

First we create a variable and assign the number 1 to it. Then we create a while loop and check if our x is less than (or equal to) 100. If less (or equal) then we perform two actions:

- Output the square x

- Increase x by 1

After the second command, the program returns to the condition. If the condition is true again, then we perform these two actions again. And so on until x becomes equal to 101. Then the condition will return false and the loop will no longer be executed.

The for loop is designed to iterate over arrays. Let's write the same example with the squares of the first hundred natural numbers, but through a for loop.

For x in range(1,101): print("The square of the number " + str(x) + " is " + str(x**2))

Let's look at the first line. We use the for keyword to create a loop. Next, we specify that we want to repeat certain actions for all x in the range from 1 to 100. The range(1,101) function creates an array of 100 numbers, starting with 1 and ending with 100.

Here's another example of iterating through an array using a for loop:

For i in : print(i * 2)

The code above outputs 4 numbers: 2, 20, 200 and 2000. Here you can clearly see how it takes each element of the array and performs a set of actions. Then it takes the next element and repeats the same set of actions. And so on until the elements in the array run out.

Classes and objects

In real life, we operate not with variables or functions, but with objects. Pen, car, person, cat, dog, plane - objects. Now let's start looking at the cat in detail.

It has some parameters. These include coat color, eye color, and her nickname. But that is not all. In addition to the parameters, the cat can perform various actions: purr, hiss and scratch.

We have just schematically described all cats in general. Similar description of properties and actions some object (for example, a cat) in Python is called a class. A class is simply a set of variables and functions that describe an object.

It is important to understand the difference between a class and an object. Class - scheme, which describes the object. The object is her material embodiment. The class of a cat is a description of its properties and actions. The cat object is the real cat itself. There can be many different real cats - many cat objects. But there is only one class of cat. A good demonstration is the picture below:

Classes

To create a class (our cat's schema), you need to write the keyword class and then specify the name of this class:

Class Cat:

Next we need to list the actions of this class (cat actions). Actions, as you might have guessed, are functions defined within a class. Such functions within a class are usually called methods.

Method- a function defined inside a class.

We have already described the cat’s methods verbally above: purring, hissing, scratching. Now let's do it in Python.

# Cat class class Cat: # Purr def purr(self): print("Purrr!") # Hiss def hiss(self): print("Shhh!") # Scratch def scrabble(self): print("Scratch-scratch !")

It's that simple! We took and defined three ordinary functions, but only inside the class.

In order to deal with the incomprehensible self parameter, let’s add one more method to our cat. This method will call all three already created methods at once.

# Cat class class Cat: # Purr def purr(self): print("Purrr!") # Hiss def hiss(self): print("Shhh!") # Scratch def scrabble(self): print("Scratch-scratch !") # All together def all_in_one(self): self.purr() self.hiss() self.scrabble()

As you can see, the self parameter, required for any method, allows us to access methods and variables of the class itself! Without this argument, we would not be able to perform such actions.

Let's now set the properties of our cat (fur color, eye color, nickname). How to do it? In absolutely any class you can define the __init__() function. This function is always called when we create a real object of our class.

In the __init__() method highlighted above, we set our cat's variables. How do we do this? First, we pass 3 arguments to this method, which are responsible for the coat color, eye color and nickname. Then, we use the self parameter to immediately set our cat to the 3 attributes described above when creating an object.

What does this line mean?

Self.wool_color = wool_color

On the left side, we create an attribute for our cat called wool_color, and then we assign this attribute the value that is contained in the wool_color parameter that we passed to the __init__() function. As you can see, the line above is no different from normal variable creation. Only the prefix self indicates that this variable belongs to the Cat class.

Attribute- a variable that belongs to a class.

So, we have created a ready-made cat class. Here is his code:

# Cat class class Cat: # Actions to be performed when creating a "Cat" object def __init__(self, wool_color, eyes_color, name): self.wool_color = wool_color self.eyes_color = eyes_color self.name = name # Purr def purr( self): print("Purrr!") # Hiss def hiss(self): print("Shhh!") # Scratch def scrabble(self): print("Scratch-scratch!") # All together def all_in_one(self) : self.purr() self.hiss() self.scrabble()

Objects

We created a cat diagram. Now let's create a real cat object using this scheme:

My_cat = Cat("black", "green", "Zosya")

In the line above, we create a variable my_cat and then assign it an object of class Cat. This all looks like a call to some function Cat(...) . In fact, this is true. With this entry we call the __init__() method of the Cat class. The __init__() function in our class takes 4 arguments: the self class object itself, which does not need to be specified, as well as 3 more different arguments, which then become attributes of our cat.

So, using the line above we have created a real cat object. Our cat has the following attributes: black fur, green eyes and the nickname Zosya. Let's print these attributes to the console:

Print(my_cat.wool_color) print(my_cat.eyes_color) print(my_cat.name)

That is, we can access the attributes of an object by writing the name of the object, putting a dot and indicating the name of the desired attribute.

The cat's attributes can be changed. For example, let's change our cat's name:

My_cat.name = "Nyusha"

Now, if you display the cat’s name in the console again, you will see Nyusha instead of Zosia.

Let me remind you that our cat’s class allows her to perform certain actions. If we pet our Zosya/Nyusha, she will begin to purr:

My_cat.purr()

Executing this command will print the text “Purrr!” to the console. As you can see, accessing an object's methods is just as easy as accessing its attributes.

Modules

Any file with a .py extension is a module. Even the one in which you are working on this article. What are they needed for? For comfort. A lot of people create files with useful functions and classes. Other programmers connect these third-party modules and can use all the functions and classes defined in them, thereby simplifying their work.

For example, you don't need to waste time writing your own functions to work with matrices. It is enough to connect the numpy module and use its functions and classes.

At the moment, other Python programmers have written over 110,000 different modules. The numpy module mentioned above allows you to quickly and conveniently work with matrices and multidimensional arrays. The math module provides many methods for working with numbers: sines, cosines, converting degrees to radians, etc., etc....

Installing the module

Python is installed along with a standard set of modules. This set includes a very large number of modules that allow you to work with mathematics, web requests, read and write files and perform other necessary actions.

If you want to use a module that is not included in the standard set, you will need to install it. To install the module, open the command line (Win + R, then enter “cmd” in the field that appears) and enter the command into it:

Pip install [module_name]

The module installation process will begin. When it completes, you can safely use the installed module in your program.

Connecting and using the module

The third-party module is very easy to connect. You only need to write one short line of code:

Import [module_name]

For example, to import a module that allows you to work with mathematical functions, you need to write the following:

Import math

How to access a module function? You need to write the name of the module, then put a dot and write the name of the function/class. For example, factorial 10 is found like this:

Math.factorial(10)

That is, we turned to the factorial(a) function, which is defined inside the math module. This is convenient, because we do not need to waste time and manually create a function that calculates the factorial of a number. You can connect the module and immediately perform the required action.

- Translation

What is the article about?

Personally, I learn best with small working code that I can play with. In this tutorial, we will learn the backpropagation algorithm using a small neural network implemented in Python as an example.Give me the code!

X = np.array([ ,,, ]) y = np.array([]).T syn0 = 2*np.random.random((3,4)) - 1 syn1 = 2*np.random.random ((4,1)) - 1 for j in xrange(60000): l1 = 1/(1+np.exp(-(np.dot(X,syn0)))) l2 = 1/(1+np. exp(-(np.dot(l1,syn1)))) l2_delta = (y - l2)*(l2*(1-l2)) l1_delta = l2_delta.dot(syn1.T) * (l1 * (1-l1 )) syn1 += l1.T.dot(l2_delta) syn0 += X.T.dot(l1_delta)Too compressed? Let's break it down into simpler parts.

Part 1: Small toy neural network

A neural network trained through backpropagation attempts to use input data to predict output data.Let's say we need to predict what the output column will look like based on the input data. This problem could be solved by calculating the statistical correspondence between them. And we would see that the left column is 100% correlated with the output.

Backpropagation, in its simplest case, calculates statistics like these to create a model. Let's try.

Neural network in two layers

import numpy as np # Sigmoid def nonlin(x,deriv=False): if(deriv==True): return f(x)*(1-f(x)) return 1/(1+np.exp(-x )) # input data set X = np.array([ , , , ]) # output data y = np.array([]).T # make the random numbers more specific np.random.seed(1) # initialize the weights to random way with mean 0 syn0 = 2*np.random.random((3,1)) - 1 for iter in xrange(10000): # forward propagation l0 = X l1 = nonlin(np.dot(l0,syn0)) # How wrong were we? l1_error = y - l1 # multiply this with the slope of the sigmoid # based on the values in l1 l1_delta = l1_error * nonlin(l1,True) # !!! # update the weights syn0 += np.dot(l0.T,l1_delta) # !!! print "Output after training:" print l1Output after training: [[ 0.00966449] [ 0.00786506] [ 0.99358898] [ 0.99211957]]

Variables and their descriptions.

"*" - element-wise multiplication - two vectors of the same size multiply the corresponding values, and the output is a vector of the same size

"-" – element-wise subtraction of vectors

x.dot(y) – if x and y are vectors, then the output will be a scalar product. If these are matrices, then the result is matrix multiplication. If the matrix is only one of them, it is the multiplication of a vector and a matrix.

- compare l1 after the first iteration and after the last

- look at the nonlin function.

- look how l1_error changes

- parse line 36 - the main secret ingredients are collected here (marked!!!)

- parse line 39 - the entire network is preparing precisely for this operation (marked!!!)

Let's break down the code line by line

import numpy as npImports numpy, a linear algebra library. Our only addiction.

Def nonlin(x,deriv=False):

Our nonlinearity. This particular function creates a “sigmoid”. It matches any number with a value from 0 to 1 and converts numbers into probabilities, and also has several other properties useful for training neural networks.

If(deriv==True):

This function can also produce the derivative of the sigmoid (deriv=True). This is one of its beneficial properties. If the function's output is an out variable, then the derivative will be out * (1-out). Effective.

X = np.array([ , …

Initializing the input data array as a numpy matrix. Each line is a training example. Columns are input nodes. We end up with 3 input nodes in the network and 4 training examples.

Y = np.array([]).T

Initializes output data. ".T" – transfer function. After translation, the y matrix has 4 rows with one column. As with the input data, each row is a training example, and each column (one in our case) is an output node. It turns out that the network has 3 inputs and 1 output.

Np.random.seed(1)

Thanks to this, the random distribution will be the same every time. This will allow us to more easily monitor the network after making changes to the code.

Syn0 = 2*np.random.random((3,1)) – 1

Network weight matrix. syn0 means "synapse zero". Since we only have two layers, input and output, we need one weight matrix that will connect them. Its dimension is (3, 1), since we have 3 inputs and 1 output. In other words, l0 has size 3 and l1 has size 1. Since we are connecting all nodes in l0 to all nodes in l1, we need a matrix of dimension (3, 1).

Note that it is initialized randomly and the mean is zero. There is a rather complex theory behind this. For now we'll just take this as a recommendation. Also note that our neural network is this very matrix. We have "layers" l0 and l1, but these are temporary values based on the data set. We don't store them. All training is stored in syn0.

For iter in xrange(10000):

This is where the main network training code begins. The code loop is repeated many times and optimizes the network for the data set.

The first layer, l0, is just data. X contains 4 training examples. We will process them all at once - this is called group training. In total we have 4 different l0 lines, but they can be thought of as one training example - at this stage it does not matter (you could load 1000 or 10000 of them without any changes in the code).

L1 = nonlin(np.dot(l0,syn0))

This is the prediction step. We let the network try to predict the output based on the input. Then we'll see how she does it so we can tweak it for improvement.

There are two steps per line. The first one does matrix multiplication of l0 and syn0. The second one passes the output through the sigmoid. Their dimensions are as follows:

(4 x 3) dot (3 x 1) = (4 x 1)

Matrix multiplications require that the dimensions be the same in the middle of the equation. The final matrix has the same number of rows as the first one, and the same number of columns as the second one.

We loaded 4 training examples and got 4 guesses (4x1 matrix). Each output corresponds to the network's guess for a given input.

L1_error = y - l1

Since l1 contains guesses, we can compare their difference with reality by subtracting l1 from the correct answer y. l1_error is a vector of positive and negative numbers characterizing a network “miss”.

And here is the secret ingredient. This line needs to be parsed piece by piece.

First part: derivative

Nonlin(l1,True)

L1 represents these three points, and the code produces the slope of the lines shown below. Note that for large values like x=2.0 (green dot) and very small values like x=-1.0 (purple) the lines have a slight slope. The largest angle at point x=0 (blue). This makes a big difference. Also note that all derivatives range from 0 to 1.

Full expression: error-weighted derivative

L1_delta = l1_error * nonlin(l1,True)

Mathematically, there are more accurate methods, but in our case this one is also suitable. l1_error is a (4,1) matrix. nonlin(l1,True) returns the (4,1) matrix. Here we multiply them element by element, and at the output we also get the matrix (4,1), l1_delta.

By multiplying derivatives by errors, we reduce the errors of predictions made with high confidence. If the slope of the line was small, then the network contained either a very large or very small value. If the network's guess is close to zero (x=0, y=0.5), then it is not particularly confident. We update these uncertain predictions and leave high-confidence predictions alone by multiplying them by values close to zero.

Syn0 += np.dot(l0.T,l1_delta)

We are ready to update the network. Let's look at one training example. In it we will update the weights. Update the leftmost weight (9.5)

Weight_update = input_value * l1_delta

For the leftmost weight it would be 1.0 * l1_delta. Presumably this will only increase the 9.5 slightly. Why? Because the prediction was already quite confident, and the predictions were practically correct. A small error and a slight slope of the line means a very small update.

But since we are doing group training, we repeat the above step for all four training examples. So it looks very similar to the image above. So what does our line do? It counts the weight updates for each weight, for each training example, sums them up and updates all the weights - all in one line.

After observing the network update, let's return to our training data. When both input and output are 1, we increase the weight between them. When the input is 1 and the output is 0, we reduce the weight.

Input Output 0 0 1 0 1 1 1 1 1 0 1 1 0 1 1 0

So in our four training examples below, the weight of the first input relative to the output will either increase or remain constant, and the other two weights will increase and decrease depending on the examples. This effect contributes to network learning based on correlations of input and output data.

Part 2: a more difficult task

Input Output 0 0 1 0 0 1 1 1 1 0 1 1 1 1 1 0Let's try to predict the output data based on three input data columns. None of the input columns are 100% correlated with the output. The third column is not connected to anything at all, since it contains ones all the way. However, here you can see the pattern - if one of the first two columns (but not both at once) contains 1, then the result will also be equal to 1.

This is a non-linear design because there is no direct one-to-one correspondence between the columns. The match is based on a combination of inputs, columns 1 and 2.

Interestingly, pattern recognition is a very similar task. If you have 100 pictures of bicycles and pipes of the same size, the presence of certain pixels in certain places does not directly correlate with the presence of a bicycle or a pipe in the image. Statistically, their color may appear random. But some combinations of pixels are not random - those that form an image of a bicycle (or tube).

Strategy

To combine pixels into something that can have a one-to-one correspondence to the output, you need to add another layer. The first layer combines the input, the second assigns a match to the output using the output of the first layer as input. Pay attention to the table.Input (l0) Hidden weights (l1) Output (l2) 0 0 1 0.1 0.2 0.5 0.2 0 0 1 1 0.2 0.6 0.7 0.1 1 1 0 1 0.3 0.2 0.3 0.9 1 1 1 1 0.2 0.1 0.3 0.8 0

By randomly assigning weights, we get hidden values for layer No. 1. Interestingly, the second hidden weights column already has a small correlation with the output. Not ideal, but there. And this is also an important part of the network training process. Training will only strengthen this correlation. It will update syn1 to assign its mapping to the output, and syn0 to better match the input.

Neural network in three layers

import numpy as np def nonlin(x,deriv=False): if(deriv==True): return f(x)*(1-f(x)) return 1/(1+np.exp(-x)) X = np.array([, , , ]) y = np.array([, , , ]) np.random.seed(1) # randomly initialize the weights, on average - 0 syn0 = 2*np.random.random ((3,4)) - 1 syn1 = 2*np.random.random((4,1)) - 1 for j in xrange(60000): # go forward through layers 0, 1 and 2 l0 = X l1 = nonlin(np.dot(l0,syn0)) l2 = nonlin(np.dot(l1,syn1)) # how much were we wrong about the required value? l2_error = y - l2 if (j% 10000) == 0: print "Error:" + str(np.mean(np.abs(l2_error))) # which way should you move? # if we were confident in the prediction, then we don’t need to change it much l2_delta = l2_error*nonlin(l2,deriv=True) # how much do the values of l1 affect the errors in l2? l1_error = l2_delta.dot(syn1.T) # in which direction should we move to get to l1? # if we were confident in the prediction, then we don’t need to change it much l1_delta = l1_error * nonlin(l1,deriv=True) syn1 += l1.T.dot(l2_delta) syn0 += l0.T.dot(l1_delta)Error:0.496410031903 Error:0.00858452565325 Error:0.00578945986251 Error:0.00462917677677 Error:0.00395876528027 Error:0.00351012256786

Variables and their descriptions

X is the matrix of the input data set; strings - training examplesy – matrix of the output data set; strings - training examples

l0 – the first network layer defined by the input data

l1 – second layer of the network, or hidden layer

l2 is the final layer, this is our hypothesis. As you practice, you should get closer to the correct answer.

syn0 – the first layer of weights, Synapse 0, combines l0 with l1.

syn1 – The second layer of weights, Synapse 1, combines l1 with l2.

l2_error – network error in quantitative terms

l2_delta – network error, depending on the confidence of the prediction. Almost identical to error, except for confident predictions

l1_error – by weighing l2_delta with weights from syn1, we calculate the error in the middle/hidden layer

l1_delta – network errors from l1, scaled by the confidence of predictions. Almost identical to l1_error, except for confident predictions

The code should be fairly clear - it's just the previous implementation of the network, stacked in two layers, one on top of the other. The output of the first layer l1 is the input of the second layer. There is something new only in the next line.

L1_error = l2_delta.dot(syn1.T)

Uses errors weighted by the confidence of predictions from l2 to calculate the error for l1. We get, one might say, an error weighted by contributions - we calculate how much the values at nodes l1 contribute to the errors in l2. This step is called backpropagation. We then update syn0 using the same algorithm as the two-layer neural network.